Hello folks,

I have been following the AI revolution for a while now and, for a guy working with Python, you may be surprised by the lack of content about it on my blog.

Like most of what I write on this blog, I am trying to bring my own view and experience to these pages, not simply resurface things that are openly available. There are smarter people who can go into the weeds and who are now fully dedicated to AI.

However, as I follow the space more and more, I see that the real battle around these models is no longer about the models themselves. It is about optimizing the data so the models can digest it better, and I took the opportunity to bring a different point of view on the topic.

A short explanation of this “new generation” of AI models

When I started to get interested in the AI hype, I honestly could not explain it. I work in IT and with data a lot, and I could not understand the hype coming out of models such as ChatGPT.

I am not saying that I did not like ChatGPT or that it was not awesome, but I could not understand how we could really imagine that this would be an AI revolution, or that OpenAI had such an edge…

The reason may be that I am old enough to have known about AI and large language models for some time before ChatGPT. At that time, it was called Machine Learning, and Data Scientist was named the sexiest job of the century.

At that time, there was a fairly famous website called Kaggle. It still exists, and if you want to get familiar with data science, it is a good place to start.

There were Machine Learning hackathons and challenges, and at that time, probably 5-6 years before the AI revolution, Neural Networks were already the hyped element of machine learning.

You basically needed a neural network to win a competition, and the battle for GPUs was already raging.

The LLMs we are seeing today are basically a specific application of Neural Networks, and for that reason, their expansion into LMMs (Large Multimodal Models) was just a matter of time.

The concept behind these “new” Neural Networks is very basic (the math isn’t, though), so I will keep it at a high level, but I thought a quick introduction to how these models work would be beneficial.

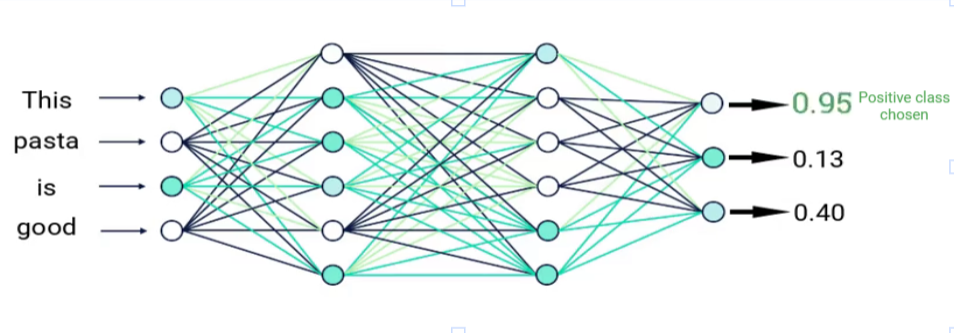

You basically have an input, this input is being transformed into readable data for the Neural Network (NN). This NN is then taking all the data points and try to deconstruct them to understand their correlation between each of them, how the different gradients that are applied to each step of the deconstruction and reconstruction would impact the final result and math the expected output.

Oversimplified representation of a neural network
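To make the gradient idea a bit more concrete, here is a minimal sketch in Python, assuming the simplest possible “network”: a single neuron with one weight and one bias, trained by plain gradient descent on hypothetical toy data that follows y = 2x + 1. Real networks stack millions or billions of these parameters, but the loop is the same in spirit: predict, measure the error against the expected output, and nudge each parameter against its gradient.

```python
# Hypothetical toy data: the "expected output" follows y = 2x + 1.
data = [(x, 2 * x + 1) for x in range(-5, 6)]


def train(data, lr=0.01, epochs=2000):
    """Fit one neuron (pred = w * x + b) by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # the parameters start knowing nothing
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            pred = w * x + b       # forward pass
            err = pred - y         # how far we are from the expected output
            grad_w += 2 * err * x  # derivative of err**2 with respect to w
            grad_b += 2 * err      # derivative of err**2 with respect to b
        w -= lr * grad_w / n       # step against the average gradient
        b -= lr * grad_b / n
    return w, b


w, b = train(data)
print(f"w = {w:.3f}, b = {b:.3f}")  # w = 2.000, b = 1.000
```

The model never “knows” the rule y = 2x + 1; it only ends up with numbers that reproduce the correlation in the data.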

The main revolution behind ChatGPT was the transformer architecture, together with a step applied generally to every input: the input is converted into something these models can easily use, called tokens. Text is broken down into chunks of words, and images into small pieces (patches).
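As an illustration of what “breaking words into chunks” means, the sketch below splits text into tokens by greedy longest match against a small, hand-written vocabulary. The vocabulary, the IDs and the `tokenize` helper are hypothetical; real tokenizers (for example byte-pair encoding) learn a vocabulary of tens of thousands of pieces or more from data, so treat this as a toy, not as how any specific model tokenizes.

```python
# Hypothetical, hand-picked vocabulary; real tokenizers learn theirs from data.
VOCAB = {"un": 0, "believ": 1, "able": 2, "token": 3, "s": 4, "ize": 5, " ": 6}


def tokenize(text, vocab=VOCAB):
    """Greedy longest match: repeatedly take the longest vocab piece that starts the remaining text."""
    ids = []
    i = 0
    max_len = max(len(piece) for piece in vocab)
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                ids.append(vocab[piece])
                i += size
                break
        else:
            # No vocabulary piece matches here: a real tokenizer falls back to bytes.
            raise ValueError(f"no token for {text[i]!r}")
    return ids


print(tokenize("unbelievable tokens"))  # [0, 1, 2, 6, 3, 4]
```

“unbelievable” becomes three tokens (“un”, “believ”, “able”): the model only ever sees these IDs, never the word itself.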

It is worth being clear that these methods do not understand what they are analyzing: they just create correlations between inputs and expected outputs. They have no idea what the element they are analyzing is; they only know that it is similar to some known pattern registered during their training.

Since the days of machine learning, we have known that having clean data, and enough of it, was key to training these models.

The main things that OpenAI (and its followers) did were to scrape the internet massively (without restrictions), to have enough capacity to compute on this enormous amount of data and, as explained before, to use a general transformer on tokenized inputs.

Then it is just a matter of predicting the next word / pixel / sound / byte, depending on the input and on the data the model was trained on.
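The “predict the next word” idea can be shown with something far older than LLMs: a bigram model that counts which word follows which in its training text and returns the most frequent follower. This is a deliberately crude stand-in (an LLM conditions on the whole context with a neural network, not on a single previous word), and the tiny corpus and helper names below are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower seen in training, or None for an unknown word."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


corpus = [
    "data is the new oil",
    "data is key",
    "data is the fuel of models",
]
model = train_bigrams(corpus)
print(predict_next(model, "data"))  # is
print(predict_next(model, "is"))    # the
print(predict_next(model, "gpu"))   # None
```

The model can only ever answer with words it has already seen, which is the same limitation discussed later in this post, just at a much smaller scale.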

The concept is fairly simple, and it was then specialized in language input and output. Why is that? Because language is the most common commodity on the internet: with all the blogs, articles, and content out there, you have almost unlimited training material.

Fierce Competition

As I explained previously, the concepts are very similar between models, and for a time, the main battle was over the computing power behind these models. The big names kept investing in resources to train on more and more data.

If you have not been living under a rock for the last 2 years, you may have heard that Nvidia is the main beneficiary of this boom. From a very high-level view, Nvidia’s main business is selling Graphics Processing Units (GPUs), and GPUs were mainly used in video games to calculate graphics from vectors (every object you see in a video game is described with vectors: positions, directions, colors).

It is a fairly complex operation, but the data in Neural Network models also happens to be represented as vectors, and especially as matrices, which can leverage the same kind of computational power these GPUs provide.

More GPUs = more nodes (parameters) = more calculation = better models.
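Here is what “data represented in matrices” looks like in the smallest possible case: one fully connected layer is a matrix of weights multiplied by an input vector, plus a bias. Every number in the weight matrices is a parameter, and the number of multiply-adds grows with the product of adjacent layer sizes, which is exactly the kind of repetitive arithmetic GPUs run in parallel. The layer sizes and weights below are hypothetical, and this pure-Python version only shows the arithmetic, not how a GPU executes it.

```python
def dense_layer(weights, inputs, biases):
    """One fully connected layer: output[j] = sum_i weights[j][i] * inputs[i] + biases[j]."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def multiply_adds(layer_sizes):
    """Multiply-add operations for one forward pass through stacked dense layers."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


weights = [[1.0, 2.0, 3.0],
           [0.0, -1.0, 0.5]]
print(dense_layer(weights, [1.0, 1.0, 2.0], [0.0, 1.0]))  # [9.0, 1.0]
print(multiply_adds([512, 2048, 2048, 512]))               # 6291456
```

Doubling the width of the middle layers roughly quadruples the work between them, which is why “bigger model” quickly turned into “more GPUs”.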

This is a big reason why OpenAI (tried to) hide their models (not so open anymore). It was not because they were too dangerous; it was just that their model, once revealed, could easily be replicated with the right amount of GPU power. In a way, it was dangerous, but for them, not for humanity.

Needless to say, for smart people at Google, Facebook and elsewhere, with the computing power these companies have, it was “fairly easy” to replicate the approach, and it was just a matter of time before they caught up on the models themselves.

The models got bigger and better very fast; for a time, it was all about size.

The Mistral and DeepSeek revolutions

Far be it from me to claim I could explain everything Mistral and DeepSeek are doing to gain an edge over the existing models, but I have been following these main competitors of the mainstream models (ChatGPT, Claude, Gemini).

The first thing I need to call out about these 2 is that they are open-source: they reveal what they are doing, so people smart enough to understand it can contribute, and, within some limits, everyone can use or replicate their setup.

As an open-source developer, I can only be happy to see the power of the community rivaling the closed models that do not push the science forward. (OpenAI being a closed organization is quite ironic in that respect.)

The second thing is that I follow quite a few people in that domain, and it was clear that Mistral and DeepSeek were very clever in their approaches.

Mistral Path

For those of you who do not know Mistral, it is a European (French) start-up that entered the AI world with a bang, offering a model that performed as well as other LLMs while being half the size, and therefore easier to manipulate.

They also offered their model as open-source, which was a very good idea at the time: the people who had been contributing to OpenAI’s models no longer had anything to work on, and no major player was publishing its model (yet*).

How did they manage that? They focused on data quality over data quantity.

It was clear from the start that they could not beat Google, OpenAI and others on GPUs, model size, or the amount of data they could scrape.

So optimizing the data before training the model (clustering the elements, cleaning out the aberrations) was crucial to get a more refined version of what most models are using. This is a clear example that data is, more than ever, the oil fueling the growth we can envision with these new tools.
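The sketch below is not Mistral’s pipeline; it only illustrates the kind of “clean the aberrations” step described above, assuming three hypothetical heuristics: normalize the text, drop exact duplicates, and drop documents that are too short or mostly symbols. The `clean_corpus` helper, its thresholds and the sample documents are arbitrary examples.

```python
import re


def clean_corpus(docs, min_words=5, min_alpha_ratio=0.6):
    """Hypothetical filters: normalize, drop exact duplicates, drop short or noisy documents."""
    seen = set()
    kept = []
    for doc in docs:
        normalized = re.sub(r"\s+", " ", doc).strip().lower()
        if normalized in seen:
            continue  # exact duplicate after normalization
        seen.add(normalized)
        if len(normalized.split()) < min_words:
            continue  # too short to carry useful signal
        letters = sum(ch.isalpha() for ch in normalized)
        if letters / len(normalized) < min_alpha_ratio:
            continue  # mostly digits or symbols: likely scraping debris
        kept.append(normalized)
    return kept


raw = [
    "Data quality beats data quantity in many cases.",
    "data   quality beats data quantity in many CASES.",
    "Click here!",
    "$$$ 1234 #### 5678 !!!! ???? %%%%",
    "Garbage in, garbage out is still true for models.",
]
print(clean_corpus(raw))  # keeps 2 of the 5 documents
```

Real pipelines go much further (near-duplicate detection, language identification, quality classifiers), but the principle is the same: every document removed here is one the model does not have to waste compute on.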

In a way, Mistral paved the way for the first iteration of LLM optimization, showing that getting the basics right (the inputs to the model) takes you a long way compared to throwing more and more resources at inefficient processes.

We have known since the old days of Analytics that “garbage in -> garbage out”, so that did not change.

DeepSeek paradigm

DeepSeek is a model from a China-based company. If Mistral was the first to bring an optimization process to model building, DeepSeek adds a further one.

Bigger was no longer the only way to get better (for now). The data-quality threshold needed to produce a very good model is not yet defined, but we are getting closer every day.

Once the data problem is resolved, what could be the next way to have an edge over the competitors?

Enter DeepSeek, the second iteration of optimization for models.

The challenge remained the same: as you cannot compete on power against the main players, how do you get the edge?

DeepSeek’s approach was innovative because they tackled it from the data engineering side. We know that the concept of NNs is globally the same for everyone, and we know we can optimize the data, so what about optimizing the way these data points are read and loaded into the nodes/matrices?

So instead of using more GPU power to get a better model, they increased the capacity of each GPU to process more information. There are cool details you can read on the internet about their optimizations (and about their alleged use of ChatGPT to do some of the heavy lifting of data distillation).
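DeepSeek’s exact tricks are beyond the scope of this post, but one widely used family of techniques for squeezing more work out of the same GPU is storing numbers with fewer bits. The sketch below shows plain symmetric 8-bit quantization in Python. It is a generic illustration of the idea (the weights and helper names are made up), not a reproduction of what DeepSeek actually did: each value drops from 4 bytes to 1, at the cost of a small rounding error.

```python
from array import array


def quantize_int8(values):
    """Symmetric int8 quantization: one shared scale maps floats into [-127, 127]."""
    peak = max(abs(v) for v in values)
    scale = peak / 127 if peak else 1.0  # avoid dividing by zero for all-zero input
    return [round(v / scale) for v in values], scale


def dequantize(q, scale):
    """Approximate the original floats from the stored integers."""
    return [x * scale for x in q]


weights = [0.42, -1.27, 0.003, 0.9]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

original = array("f", weights)  # float32: 4 bytes per value
packed = array("b", q)          # signed char: 1 byte per value
print(original.itemsize * len(original), "bytes ->", packed.itemsize * len(packed), "bytes")  # 16 bytes -> 4 bytes
print("worst rounding error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

The rounding error is at most half of `scale`, so the same memory and bandwidth can hold four times as many values, which is the kind of trade-off that lets each GPU “treat more information”.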

* A note about Facebook: they have also been publishing their Llama models in a sort of open-source setup since they joined the fray.

What AI is not able to do (yet)

As we have seen, AI is not the revolution we hoped it would be; it is following a normal path for a capital-intensive innovation. It is becoming evident that the current models have their limitations, and the iterations of optimized models (DeepSeek, Mistral) will continue, bringing prices down in this capital-intensive sector over the next few years.

Now I would like to cover some areas where AI is not there (yet), and maybe calm down the overly enthusiastic:

Generating innovation

On this point, some may come and explain that AI made exceptional discoveries over the last years, and that we can create new images or new videos that never existed before.

However, the way the models work today, they use existing data to generate new data. Everything is based on the elements they have already analyzed and been trained on.

What AI is really good at is generating new outputs based on directions. It can create more connections and more variations than any human. It does the heavy lifting, and most of the impressive outputs came from excellent prompt engineering that let the power of GPUs do its magic.

Understanding your code

As I am writing this, there are posts almost every day about how computer engineering is dead, or how you should not learn it anymore because AI will replace everyone.

I think this is an exaggeration of what AI can do: AI does not understand what it is analyzing, and it is not in your mind to get the required details and the full constraints of your application logic.

Of course, prompt engineering will help uncover these details, but what AI will be used for is code optimization; the design patterns and ideas to create these elements can only come from someone who knows what they are doing.

On this, I tried to use different models to make them understand aepp, and because aepp is class- and object-based, fairly large, and full of dependencies and relationships… it did not work.

Generating unbiased ideas

At the beginning of the AI era, there was some controversy around AI agents that turned Nazi or spouted nonsense.

In some way, there will always be a way to hack an AI agent: they are all based on their data, and if you corrupt that data in some way or form, it will eventually lead to the quality issues we discussed previously.

As more and more content is created with the help of AI, we are entering a very dangerous feedback loop where new content is generated by the same algorithms that will then look back at that data for verification. You can see the problem.

It is also worth noting that some websites are now designed to lure crawlers into ingesting fake or wrong data, in order to bias the AI agents.

I think this kind of AI vs. human data battle will become more and more prevalent as we better understand the limitations of the current Neural Network concepts.

I hope this article helped you understand the current state of AI and why data is the core foundation of your AI capability. It also gives you an honest view of its capabilities and tries to give a fair assessment of what we can expect from it.

AI is a tool, and a great one, and I believe that understanding how and when to use that tool is very important.

If there are elements I am not covering (I mean, there are a lot, but big misses), don’t hesitate to comment. Talk to you soon.